Transparency vs. Black Box AI: Navigating the Landscape of Feedback Analysis

Around 2014, while I was working as an AI consultant, several companies asked whether I could help them use AI to save time analyzing open-ended survey responses. Since survey responses are traditionally coded by market researchers, I spoke to several of them about how AI could help. They all raised the same concern: “I don’t want a black box!”

Fast-forward ten years, and the AI most companies use today is basically a black box. And most users don’t care.

What’s happening here? Why have we stopped caring about AI’s lack of transparency? Does transparency still matter when analyzing survey feedback, or unstructured feedback of any kind? Let’s explore.

Why do market researchers want a transparent box? Transparency means the results can be explained, and explainability supports understanding and builds trust. Researchers need to trust that the AI analyzes the data correctly. They also need to tweak the AI’s decision-making so that the results fit their reporting needs, a nuance the AI has no way of knowing.

For the past 20 years, customer feedback management solutions have tackled the analysis of open-ended responses and other unstructured feedback in various ways:

1. Word clouds, aka “the mullets of the internet”.

These sometimes revealed that respondents favored particular words, e.g. “helpful”. They are transparent, but only because they are so simplistic.

2. DIY rule-based tagging or full-blown industry-specific taxonomies of rules.

This is more useful than word clouds because it’s scalable and transparent! You can edit a rule at any time. That said, it’s not actually AI: you need to tell the system what to find in the data. Some rules can get quite complex and unmanageable, and they take time to create and maintain.

3. Supervised categorization

Here, you train the system by giving it examples. This is definitely AI, but it’s not transparent: if the system makes a mistake, you cannot easily identify it, and you cannot tweak it.

I learnt that the more transparent the solution was, the more likely people were to buy or use it. So rule-based taxonomies “ruled”! The more work people put into crafting those rules, the less likely they were to abandon all that hard work for the promise of AI that could do it for them.

When I started Thematic, my goal was to preserve the transparency of the rule-based approach while harnessing the power of AI. We could have built AI that used rules, but rules are difficult to understand and maintain.

Simple rule:

IF((friendly OR friendliness) NEAR (staff OR people OR lady OR man OR service)) TAG friendly service.

Complex rule:

IF((friendly OR friendliness OR frendly OR warm OR kind OR nice) NEAR/10 (customer OR client)~1 AND (support OR staff OR help OR care OR assistance OR people OR lady OR man OR service) NOT (user OR user-friendly)) TAG friendly service.
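To see why such rules are hard to maintain, here is a minimal Python sketch of how a tagger might evaluate a simplified version of the complex rule above. It is an illustration under assumptions, not how any particular product implements rules: `NearRule`, `tokenize`, and the sample comments are hypothetical, `NEAR/10` is read as “within 10 tokens, in either order”, and the `(customer OR client)~1 AND` clause is left out because its exact semantics aren’t spelled out here.

```python
import re
from dataclasses import dataclass, field

# Tokenizer that keeps hyphenated words such as "user-friendly" as a single token.
TOKEN_RE = re.compile(r"[a-z]+(?:-[a-z]+)*")


def tokenize(text: str) -> list[str]:
    return TOKEN_RE.findall(text.lower())


@dataclass
class NearRule:
    """Hypothetical encoding of: IF((left) NEAR/window (right) NOT (excluded)) TAG tag."""
    tag: str
    left: set[str]
    right: set[str]
    window: int = 5  # assumed max token distance for NEAR
    excluded: set[str] = field(default_factory=set)

    def matches(self, text: str) -> bool:
        tokens = tokenize(text)
        # NOT clause: any excluded token vetoes the tag.
        if any(t in self.excluded for t in tokens):
            return False
        left_pos = [i for i, t in enumerate(tokens) if t in self.left]
        right_pos = [i for i, t in enumerate(tokens) if t in self.right]
        # NEAR clause: some left term within `window` tokens of some right term.
        return any(abs(i - j) <= self.window for i in left_pos for j in right_pos)


friendly_service = NearRule(
    tag="friendly service",
    # Every spelling variant (e.g. "frendly") must be listed by hand.
    left={"friendly", "friendliness", "frendly", "warm", "kind", "nice"},
    right={"support", "staff", "help", "care", "assistance",
           "people", "lady", "man", "service"},
    window=10,
    excluded={"user", "user-friendly"},
)

comments = [
    "The staff were so friendly and helpful.",
    "Nice app, very user-friendly.",
    "Support took ages to reply.",
]
for c in comments:
    label = friendly_service.tag if friendly_service.matches(c) else "(no tag)"
    print(c, "->", label)
```

Only the first comment gets tagged. Note that “helpful” does not match “help”: unless someone remembers to add it, the rule silently misses it, which is exactly the maintenance burden described above.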

So at Thematic we took a different approach to building AI. We wanted to be able to look into the AI’s decisions at multiple points during the analysis, so that the researcher could tweak things. Here’s what we considered:

Retaining Key Words:

Traditional AI approaches discard certain common words, known as stop words, before analyzing text. Our method makes this list configurable instead, so words that carry meaning in feedback can be retained.
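As an illustration of why a configurable list matters, here is a minimal Python sketch assuming the discarded words are a conventional stop-word list. The `DEFAULT_STOP_WORDS` set is a small illustrative assumption, not any specific library’s list, and `content_words` is a hypothetical helper. Many generic stop-word lists include negations such as “not”, which flips the meaning of a comment like “the staff was not helpful”.

```python
import re

# Illustrative generic stop-word list (an assumption, not a specific library's list).
DEFAULT_STOP_WORDS = {"the", "a", "an", "is", "was", "very", "not", "no", "and", "to"}


def content_words(text: str, stop_words: set[str]) -> list[str]:
    """Lower-case, tokenize, and drop every token in the configured stop-word list."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return [t for t in tokens if t not in stop_words]


# Configurable list: the analyst keeps negations because they change the meaning.
KEEP = {"not", "no"}
feedback_stop_words = DEFAULT_STOP_WORDS - KEEP

comment = "The staff was not helpful"
print(content_words(comment, DEFAULT_STOP_WORDS))   # ['staff', 'helpful']
print(content_words(comment, feedback_stop_words))  # ['staff', 'not', 'helpful']
```

With the default list, a complaint reads like praise; with the adjusted list, the negation survives.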

Domain Adaptability:

Pre-trained word embeddings are powerful but can miss domain-specific nuances. For certain domains, users can opt for custom language models tailored to their context. Our thematic analysis method adapts to any industry and type of feedback.

Synonym Control:

We make it easy for expert users to tweak or customize synonym mappings, addressing any instances where the AI may inaccurately equate certain words. This helps preserve precision and context.
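A minimal Python sketch of the idea, assuming synonym mappings are stored as groups of words keyed by a canonical term. The suggested groups, the overrides, and the helper names (`apply_overrides`, `build_lookup`, `normalize`) are all hypothetical; in practice the suggestions would come from a model rather than being hard-coded. The point is that expert edits are layered on top of the AI’s suggestions, so they stay visible and reversible.

```python
# Hypothetical AI-suggested synonym groups: canonical term -> words treated as equivalent.
suggested = {
    "price": {"price", "cost", "pricing", "cheap"},
    "staff": {"staff", "employees", "team", "people"},
}

# Hypothetical expert overrides: remove equivalences the AI got wrong, add ones it missed.
removals = {"price": {"cheap"}}  # in some feedback "cheap" signals low quality, not low price
additions = {"staff": {"crew"}}  # a domain term the model did not group


def apply_overrides(groups, removals, additions):
    """Return a new synonym map with expert edits applied; `groups` is left unchanged."""
    result = {canon: set(words) for canon, words in groups.items()}
    for canon, words in removals.items():
        if canon in result:
            result[canon] -= words
    for canon, words in additions.items():
        result.setdefault(canon, {canon}).update(words)
    return result


def build_lookup(groups):
    """Invert canonical -> words into word -> canonical."""
    return {word: canon for canon, words in groups.items() for word in words}


def normalize(tokens, lookup):
    """Map each token to its canonical term; unknown tokens pass through unchanged."""
    return [lookup.get(t, t) for t in tokens]


curated = apply_overrides(suggested, removals, additions)
tokens = ["cheap", "crew", "cost"]
print(normalize(tokens, build_lookup(suggested)))  # ['price', 'crew', 'price']
print(normalize(tokens, build_lookup(curated)))    # ['cheap', 'staff', 'price']
```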

Theme Identification Transparency:

Thematic's approach reveals how and why themes were identified by the AI and gives users the ability to modify them. This fosters transparency and empowers users to tweak results according to their needs.

Taxonomy Flexibility:

We give users the freedom to adjust the AI-generated taxonomy, tailoring it to their organization and aligning it more closely with specific project requirements.

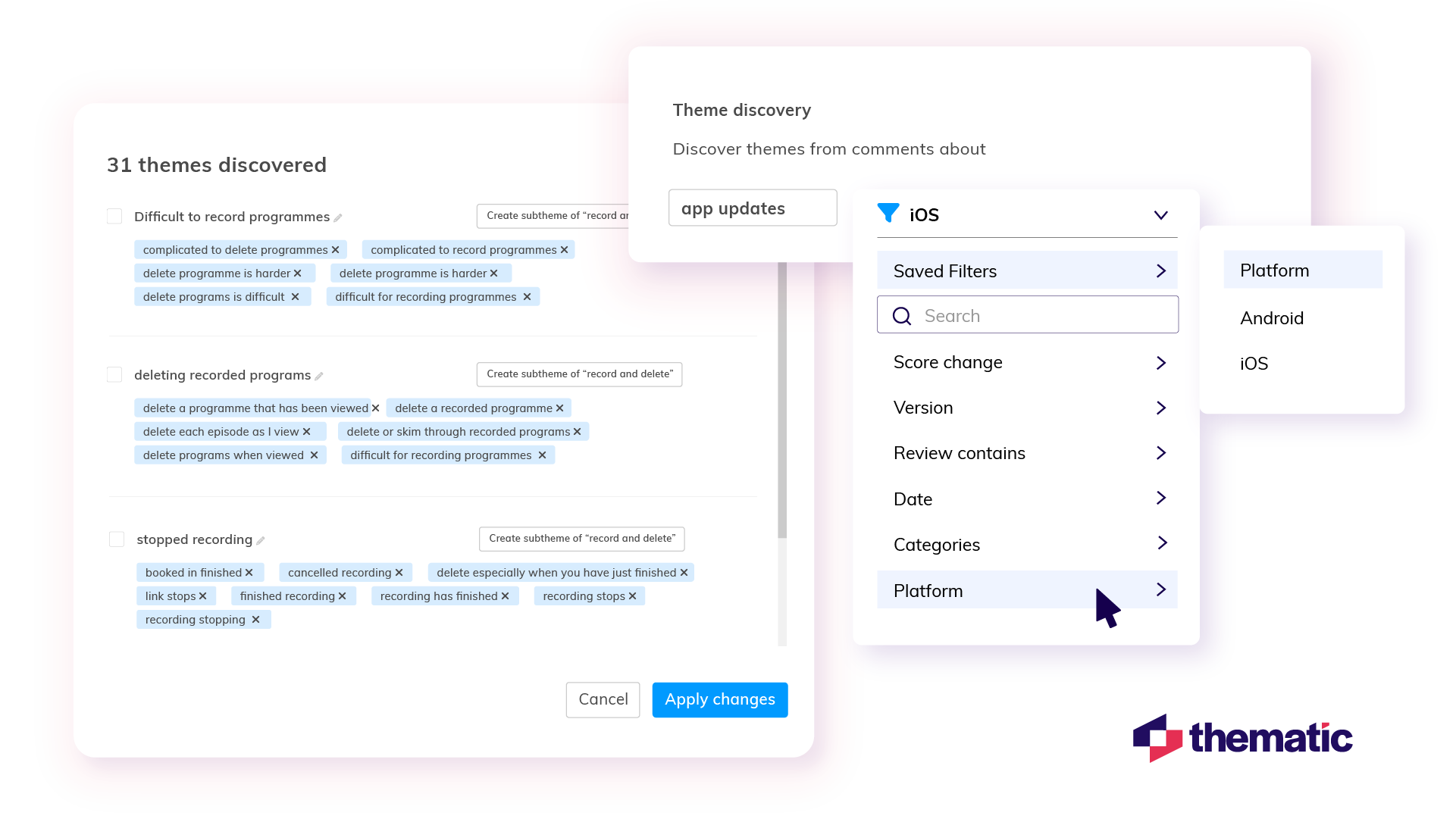

Custom Granularity:

With our Theme Discovery tool, users can go deeper into themes of their choice, beyond the AI's initial analysis. This lets them uncover additional insights or nuances that may not have surfaced initially.

User-Generated Themes:

Users can also introduce their own themes that the AI may have overlooked, and the AI then helps discover alternative references or expressions related to these themes. This keeps the analysis rich and comprehensive.

In summary, Thematic breaks down a complex process of feedback analysis into many tweakable sub-steps. This provides both flexibility and increased trust through transparency.

The approach we built became extremely popular and was recognized as unique in the industry. We started beating the pre-canned taxonomies and winning customers from incumbents.

When GPT-3.5 was launched, researchers started using it to analyze open-ended survey responses. And it felt like magic! You didn’t need to teach it synonyms and abbreviations. It could deal with extremely messy text and misspellings. It understood irony and sarcasm!

I remember reading a tweet by someone saying:

Imagine that you are an ornithologist and one day you realize we “solved birds”!

That’s what the arrival of GPT-3.5 felt like for anyone who works in Natural Language Processing. LLMs easily solved a huge number of language problems that had seemed too hard to solve fully for decades.

As a result, at Thematic we immediately started using LLMs for tasks that traditional AI methods had handled poorly or not at all. But we also asked ourselves: if language analysis has become more accurate, in fact nearly perfect, do we still need LLM-based customer feedback analysis to be transparent?

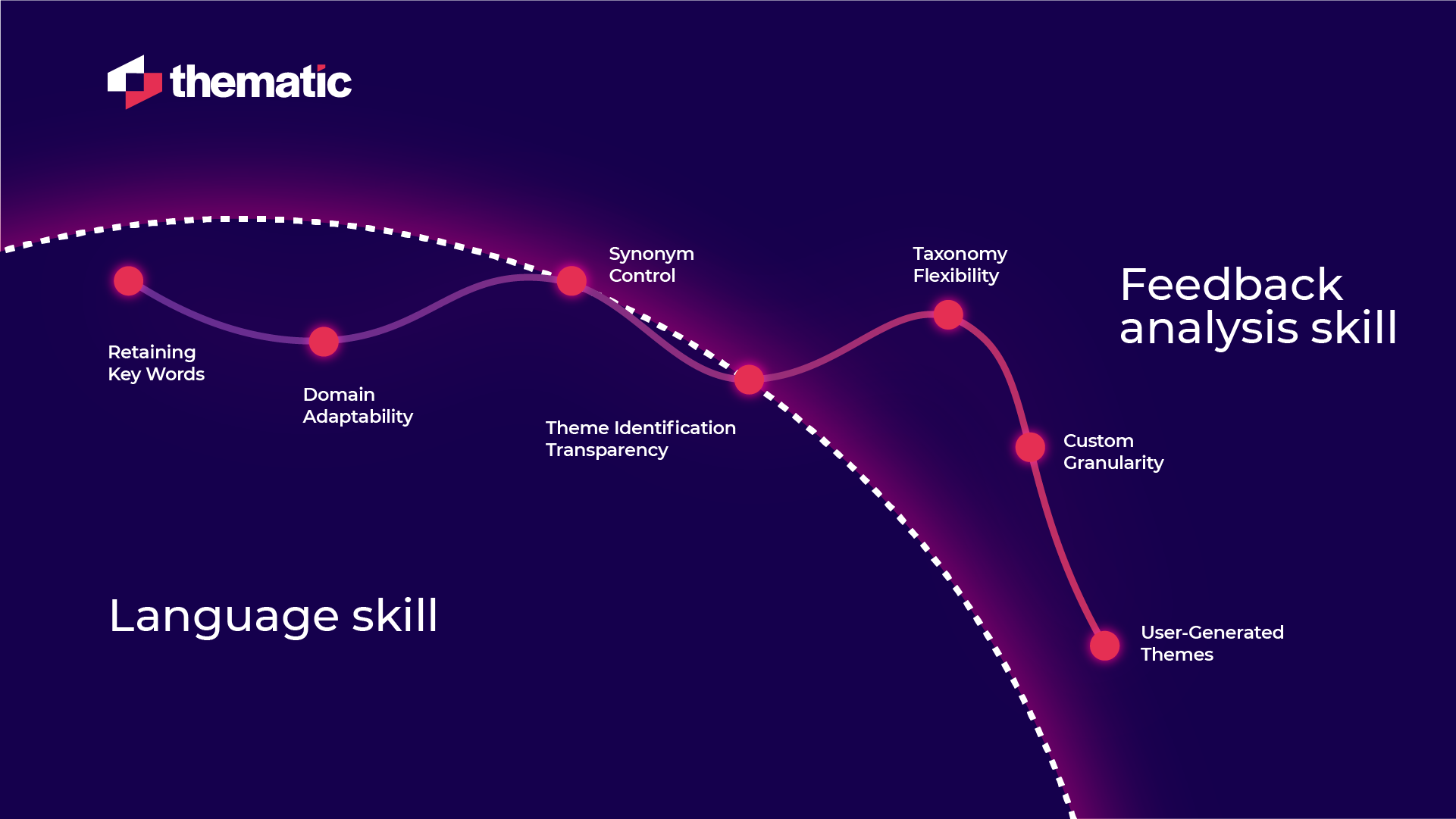

Yes, it does. I view this as two separate skill sets: being able to speak the language, and being able to analyze feedback. I don’t need transparency into how LLMs understand text, but I do need transparency into how the feedback is analyzed, because ultimately we make decisions based on that analysis.

Imagine a clever intern who joins your team. They can speak the language and write well, but they don’t have market research skills, and they don’t know much about your industry, your company, or your department’s goals. Here is how the transparency features above split between these two skill sets:

- Retaining Key Words - Language skill

- Domain Adaptability - Language skill

- Synonym Control - Language and feedback analysis skills

- Theme Identification Transparency - Language and feedback analysis skills

- Taxonomy Flexibility - Feedback analysis skill

- Custom Granularity - Feedback analysis skill

- User-Generated Themes - Feedback analysis skill

It still makes mistakes.

Researchers believe that, because generative AI is predictive by nature, it does not fully understand the meaning of text. That’s a valid point. On many occasions, we’ve seen it take something a single survey respondent or reviewer mentioned and present it as a trend. This needs verifying. I believe it will get smarter and make fewer mistakes fairly soon, but the need for some degree of verification, and for debugging the path it took to reach a decision, will remain.

It still does not know the nuance of your business, and you need to teach it.

Think about delegating a task to a person. Sometimes it’s faster to do it yourself than to teach someone all the context and background they’ll need to do it correctly. There’s always a trade-off. For example, I once used GPT-4 to analyze feedback from parents at my son’s primary school. From the AI’s output, I could quickly see the mistakes it made and correct them. But if you told me to first teach the AI everything there is to know about the school and everything parents might mention, because I wouldn’t be able to tweak the results afterwards, I’d struggle. You don’t know what assumptions it has, just as you wouldn’t with a new hire or an intern.

It is not deterministic.

Imagine a company that decides to push hundreds of surveys into an LLM and then interrogate the data via a simple, Google-like question interface. You ask a question, and it tells you the answer. On the surface, it looks perfect, until two heads of department get two different answers to the same question. Which one is correct? Or say we need to compare feedback month to month: consistency is critical. This is why being able to trace the analysis back to verbatims will remain important in AI-powered feedback analysis.
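A minimal Python sketch of what traceability could look like, assuming theme assignments have already been produced (by rules, a model, or an LLM) and stored per comment. `Comment`, `assignments`, and `theme_report` are hypothetical names, and the data is invented for illustration. Because counts are computed from stored assignments rather than by re-asking a model, the same question always gets the same answer, and every number links back to the verbatims behind it.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Comment:
    id: str
    month: str  # e.g. "2024-05"
    text: str


# Hypothetical stored output of the analysis step: comment id -> assigned themes.
# Persisting assignments, instead of regenerating them per query, keeps reports repeatable.
assignments = {
    "c1": {"friendly service"},
    "c2": {"wait times"},
    "c3": {"friendly service", "wait times"},
}

comments = [
    Comment("c1", "2024-05", "Staff were lovely."),
    Comment("c2", "2024-05", "Waited 40 minutes on hold."),
    Comment("c3", "2024-06", "Nice people, but the queue was long."),
]


def theme_report(comments, assignments):
    """Group comment ids by (month, theme) so each count can be traced to its verbatims."""
    report = defaultdict(list)
    for c in comments:
        for theme in sorted(assignments.get(c.id, ())):
            report[(c.month, theme)].append(c.id)
    return dict(report)


report = theme_report(comments, assignments)
for (month, theme), ids in sorted(report.items()):
    print(month, theme, len(ids), ids)
```

Comparing months then means comparing counts from the same stored assignments, and any surprising number can be opened up to read the comments that produced it.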

We’ll see a shift in what people can delegate to AI.

We can now trust AI to understand language and generate meaningful summaries of small subsets of data. But we still need explainable feedback analysis. We need to be able to see where it makes a mistake. Most importantly, we need to teach it the nuances of our specific use cases, control what it considers, and make adjustments. When we build tools that let humans collaborate with AI, keeping a human in the loop, we get the best results: true co-intelligence.

I’m excited to see what’s still to come and be part of the revolution we are experiencing.